Artificial intelligence is changing quickly, and so is the way enterprises implement and use it: the shift is away from static AI models and toward dynamic, autonomous systems known as AI agents. Companies adopting these systems aim not only to improve efficiency but also to gain adaptability and proactive decision-making. AI agents are more than a buzzword; they represent a significant evolution in how organizations use technology to achieve their goals.

In simple terms, AI agents are software entities designed to perceive their environment, reason about it, act on it, and continuously learn from their experiences. Unlike traditional AI models that react to predefined conditions, these agents operate autonomously, driven by goals and real-time insights.

Gartner predicts that 33% of enterprise software applications will include agentic AI by 2028, up from less than 1% in 2024, which underscores how important agents are becoming to business operations. This article explores AI agents from the ground up, covering the components that define their architecture: memory systems, reasoning mechanisms, tool interactions, and autonomy levels. Throughout, it connects theoretical frameworks with practical applications in enterprise settings.

What Are AI Agents? A Clear, Practical Definition

To grasp the essence of AI agents, it is crucial to establish a clear definition. AI agents are autonomous software entities capable of independently planning, deciding, and executing actions to achieve specified goals.

Here are the core characteristics that define AI agents:

- Goal-oriented behavior: AI agents prioritize objectives and adjust their actions based on changing circumstances.

- Context awareness: They understand specific environments and situations, making them effective in various application areas.

- Continuous feedback loops: Learning from actions and outcomes allows these agents to refine their processes over time.

Consider the difference between chatbots and AI agents. Chatbots usually operate within strict confines and provide scripted responses. AI agents, by contrast, use multi-step workflows to interpret inputs, reason about decisions, and perform tasks autonomously. A McKinsey statistic indicates that agent-based automation can reduce operational effort by 30-50% in knowledge-heavy workflows, which points to its potential impact.

Real-world examples demonstrate the versatility of AI agents:

- Customer support agents: Handling inquiries and resolving issues in real time.

- DevOps remediation agents: Automating corrective measures in IT environments.

- Financial reconciliation agents: Streamlining processes for accuracy and efficiency.

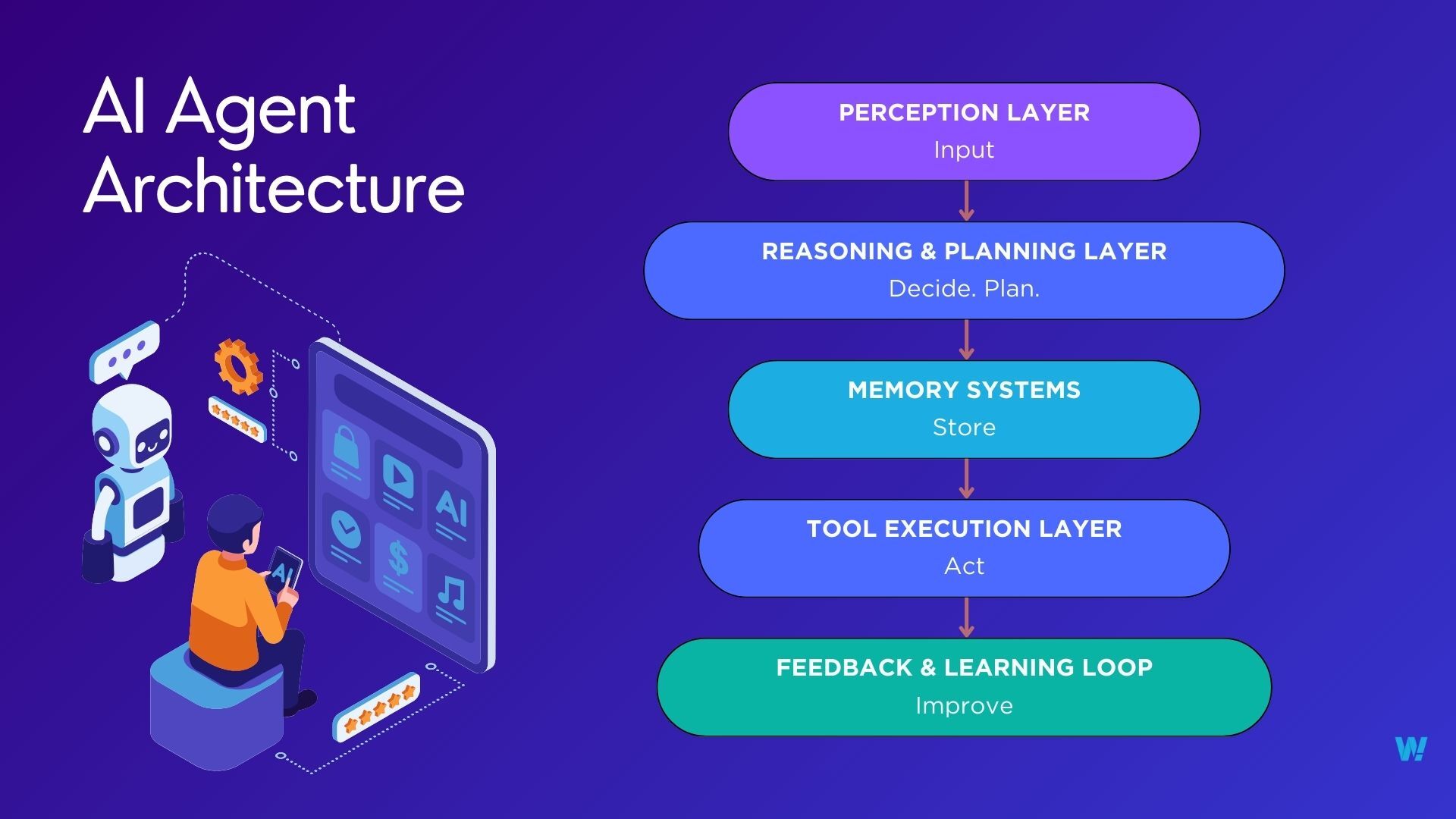

Core Components of an AI Agent Architecture

Modern AI agents are no longer monolithic systems; they are built on modular, layered architectures that enable flexibility, scalability, and continuous improvement. This modular design mirrors how complex human tasks are decomposed in cognitive science — perception, reasoning, memory, action, and learning each serve a distinct purpose, yet interoperate seamlessly.

- Perception Layer: At the foundation of any AI agent is the ability to sense and interpret its environment. The perception layer consists of interfaces and subsystems that ingest diverse data formats: text, speech, visual feeds, sensor logs, user interactions, and structured records. This layer often employs natural language understanding (NLU), computer vision models, and signal processing techniques to normalize raw inputs into machine-understandable representations. For example, a customer support agent might parse chat transcripts and voice inputs, while a robotic agent would combine camera and lidar data to understand physical surroundings. High-quality perception models reduce ambiguity and improve downstream reasoning accuracy.

- Reasoning & Planning Layer: This component is the “cognitive core” of the agent. It interprets perceived data, integrates it with stored knowledge, and formulates a sequence of actions. Reasoning engines leverage symbolic logic, probabilistic models, and large language models (LLMs) to infer intent and plan tasks. Planning modules, often inspired by classical AI techniques such as Markov decision processes (MDPs) or hierarchical task networks (HTNs), help the agent map from a current state to goal states through actionable steps. This layer balances short-term tactical responses with long-term strategic goals, which is critical in scenarios like automated scheduling, dynamic resource allocation, or multi-step workflows.

- Memory Systems: Memory systems provide persistent context that gives AI agents continuity across interactions and time. Unlike stateless models, agents with memory can recall past user preferences, historical decisions, and environmental changes. This includes short-term memory for the current session and long-term memory for user profiles, task histories, and learned heuristics. Techniques range from simple key-value stores to structured databases and vector embeddings for semantic recall. Memory enables personalization, reduces repetitive queries, and supports more coherent multi-turn conversations, a capability shown to improve user satisfaction in conversational systems by over 30% in enterprise deployments.

- Tool Execution Layer: Agents do not operate in isolation; they must effect change in external systems. The tool execution layer provides connectivity to APIs, databases, automation scripts, and enterprise services. Whether triggering a CRM update, fetching a document, or launching a support ticket, this layer ensures the agent can interact with real-world infrastructure securely and efficiently. Abstractions such as API adapters, secure credential stores, and orchestrators help manage these integrations while enforcing governance policies.

- Feedback & Learning Loop: AI agents must adapt and improve over time. The feedback and learning loop ingests signals from outcomes, user feedback, telemetry, and performance metrics to refine behavior. This can involve retraining models, updating memory representations, or adjusting reasoning heuristics. Closed-loop learning ensures that agents evolve with changing environments and user expectations, which is especially critical in fast-moving domains like e-commerce, customer support, and operational automation. Monitoring frameworks and continuous evaluation pipelines are key enablers of this adaptive capability.

Research from Stanford indicates that modular agent architectures outperform monolithic pipelines by approximately 40% in task completion accuracy, highlighting the effectiveness of this design.

| Component | Purpose | Enterprise Example |
|---|---|---|
| Perception | Understand inputs | Parsing tickets, logs |
| Reasoning | Decide next action | Task planning |
| Memory | Retain context | Long-term user history |
| Tools | Execute actions | APIs, databases |
| Feedback | Improve outcomes | Reinforcement signals |
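To make the layering concrete, here is a minimal Python sketch that wires the five components into one loop. Everything in it is hypothetical and chosen for illustration: the `Agent` class, the keyword rule inside `reason` (a stand-in for a model call), and the `restart_service` and `noop` tools.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent with one method per architectural layer."""
    tools: dict[str, Callable[[str], str]]
    memory: list[dict] = field(default_factory=list)

    def perceive(self, raw: str) -> dict:
        # Perception: normalize raw input into a structured observation.
        return {"text": raw.strip().lower()}

    def reason(self, obs: dict) -> str:
        # Reasoning: pick the next action. A keyword rule stands in for an LLM.
        return "restart_service" if "down" in obs["text"] else "noop"

    def act(self, action: str, obs: dict) -> str:
        # Tool execution: dispatch to a registered tool.
        return self.tools[action](obs["text"])

    def learn(self, obs: dict, action: str, result: str) -> None:
        # Feedback: record the outcome so later steps can consult it.
        self.memory.append({"obs": obs, "action": action, "result": result})

    def step(self, raw: str) -> str:
        obs = self.perceive(raw)
        action = self.reason(obs)
        result = self.act(action, obs)
        self.learn(obs, action, result)
        return result


agent = Agent(tools={
    "restart_service": lambda text: "restarted",
    "noop": lambda text: "nothing to do",
})
print(agent.step("  Payment API is DOWN  "))
print(agent.step("all systems normal"))
```

Because each layer is a separate method, any one of them could be swapped (for instance, replacing the keyword rule with a model call) without touching the others, which is the practical benefit of the modular design.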

Perception Layer: How AI Agents Understand the World

The perception layer is crucial for how AI agents understand and interact with their environment. Agents can process various input types, including:

- Text, voice, and images

- Logs, metrics, and APIs

Large Language Models (LLMs) and multimodal models play a significant role in expanding the capabilities of perception in AI agents. Grounding inputs with structured data is vital for improving the clarity and accuracy of the interpretations.

According to Google Research, multimodal agents can achieve up to 2x improvement in task success compared to text-only agents. This enhancement is particularly relevant in enterprise environments, which may leverage inputs from:

- CRM events

- System alerts

- User behavior signals
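One way to picture grounding with structured data is a normalization step that maps each enterprise input onto a single shape before reasoning begins. The sketch below assumes three made-up event formats (`crm`, `alerting`, `clickstream`) and a hypothetical `Observation` record; real payloads will differ.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Observation:
    source: str      # which system produced the input
    kind: str        # coarse category the reasoning layer can branch on
    summary: str     # short text suitable for a prompt
    severity: int    # 0 = informational, higher = more urgent


def normalize(event: dict[str, Any]) -> Observation:
    """Map a raw event from one of three hypothetical sources to an Observation."""
    source = event["source"]
    if source == "crm":
        return Observation("crm", "customer", f"{event['account']}: {event['change']}", 0)
    if source == "alerting":
        sev = {"info": 0, "warning": 1, "critical": 2}[event["level"]]
        return Observation("alerting", "incident", event["message"], sev)
    if source == "clickstream":
        return Observation("clickstream", "behavior", f"user {event['user']} {event['action']}", 0)
    raise ValueError(f"unknown source: {source!r}")


events = [
    {"source": "crm", "account": "Acme", "change": "plan upgraded"},
    {"source": "alerting", "level": "critical", "message": "disk 95% full on db-1"},
    {"source": "clickstream", "user": "u42", "action": "abandoned checkout"},
]
# Most urgent observation first, so the agent handles it before the rest.
for obs in sorted(map(normalize, events), key=lambda o: -o.severity):
    print(obs)
```

Rejecting unknown sources with an error, rather than guessing, keeps ambiguous input from reaching the reasoning layer silently.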

Memory in AI Agents: Short-Term, Long-Term & Episodic Memory

Memory plays a foundational role in enabling AI agents to behave consistently, adapt over time, and operate beyond single-turn interactions. Modern agent architectures typically rely on three complementary types of memory, each serving a distinct purpose in decision-making and learning.

- Short-term memory: This captures the immediate conversation state, task variables, and contextual signals required to complete the current objective. It enables the agent to maintain coherence across multi-step interactions, track user intent, and respond appropriately as tasks evolve within a session.

- Long-term memory: Long-term memory allows agents to persist knowledge across sessions using embeddings stored in vector databases. This memory layer supports personalization, recall of historical interactions, domain knowledge retrieval, and continuity, making the agent increasingly effective the more it is used.

- Episodic memory: Episodic memory records sequences of actions, decisions, and their outcomes. By referencing past successes and failures, agents can refine future strategies, avoid repeated mistakes, and improve planning quality, particularly in complex, multi-step workflows.

Vector databases such as Pinecone and Weaviate, and similarity-search libraries such as FAISS, make this kind of recall scalable and provide a foundation for long-term data retention. According to MIT CSAIL, agents with persistent memory show a 35-45% improvement in multi-step reasoning tasks.

| Memory Type | Stored Data | Technology |
|---|---|---|
| Short-term | Context window | LLM prompt state |
| Long-term | Knowledge, history | Vector DB |
| Episodic | Actions & outcomes | Logs + embeddings |
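The three memory types can be illustrated in a few lines of plain Python. This is only a sketch under simplifying assumptions: the `AgentMemory` class is hypothetical, the "embedding" is a bag-of-words count rather than a learned vector, and an in-memory list takes the place of a vector database.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy embedding: bag-of-words counts. A real system would use a learned model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, window: int = 3):
        self.short_term = deque(maxlen=window)            # recent turns only
        self.long_term: list[tuple[Counter, str]] = []    # (embedding, fact) pairs
        self.episodes: list[dict] = []                    # action/outcome records

    def remember_turn(self, text: str) -> None:
        self.short_term.append(text)

    def store_fact(self, fact: str) -> None:
        self.long_term.append((embed(fact), fact))

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Semantic recall: rank stored facts by similarity to the query.
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[0]), reverse=True)
        return [fact for _, fact in ranked[:k]]

    def log_episode(self, action: str, outcome: str) -> None:
        self.episodes.append({"action": action, "outcome": outcome})

    def failed_actions(self) -> set[str]:
        return {e["action"] for e in self.episodes if e["outcome"] == "failure"}


memory = AgentMemory(window=2)
for turn in ["hi", "my invoice is wrong", "it is invoice 881"]:
    memory.remember_turn(turn)
memory.store_fact("customer prefers email contact")
memory.store_fact("invoice disputes go to the billing team")
memory.log_episode("auto_refund", "failure")

print(list(memory.short_term))
print(memory.recall("who handles an invoice dispute"))
print(memory.failed_actions())
```

The bounded `deque` drops the oldest turn once the window is full, which mirrors how a context window forgets, while the fact store and episode log persist for the lifetime of the object.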

Reasoning & Planning: How AI Agents Decide What to Do Next

At the heart of every capable AI agent lies its reasoning and planning system—the mechanism that transforms raw inputs into intentional, goal-driven actions. Unlike traditional automation, AI agents don’t simply follow predefined rules. Instead, they continuously interpret context, evaluate options, and decide the next best step based on both short-term goals and long-term objectives. This is what enables agents to operate autonomously in dynamic, real-world environments.

Core Reasoning Paradigms Used by AI Agents

Modern AI agents rely on advanced reasoning techniques that go beyond single-response prompting:

- Chain-of-Thought (CoT): The agent breaks down a problem into sequential reasoning steps before arriving at a conclusion. This improves transparency and accuracy, especially for multi-step tasks such as analysis, decision-making, or logical inference.

- Tree-of-Thought (ToT): Instead of following one linear path, the agent explores multiple reasoning branches in parallel, evaluates outcomes, and selects the optimal path. Research from Princeton shows that Tree-of-Thought-based agents can improve complex problem-solving accuracy by up to 70% compared to single-pass reasoning.

- ReAct (Reason + Act): This hybrid approach interleaves reasoning with action. The agent reasons about a task, executes an action (such as calling an API or querying a database), observes the result, and then continues reasoning. This makes agents more adaptive in real-world workflows.
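The reason, act, observe cycle of ReAct is easiest to see as a loop. In the sketch below the model is replaced by a hypothetical `scripted_policy` function so the example runs without any external service; the `lookup_order` tool and the order ID are likewise invented for illustration.

```python
from typing import Callable

# Hypothetical tools the agent may call.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: "shipped" if order_id == "A17" else "unknown",
}


def scripted_policy(question: str, history: list[tuple[str, str, str]]) -> tuple[str, str, str]:
    """Stand-in for an LLM. Returns (thought, action, argument)."""
    if not history:
        return ("I need the order status first.", "lookup_order", "A17")
    last_observation = history[-1][2]
    return (f"The status is {last_observation}.", "finish", f"Order A17 is {last_observation}.")


def react(question: str, policy=scripted_policy, max_steps: int = 5) -> str:
    history: list[tuple[str, str, str]] = []   # (thought, action, observation)
    for _ in range(max_steps):
        thought, action, arg = policy(question, history)             # reason
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)                             # act
        history.append((thought, f"{action}({arg})", observation))   # observe
    return "stopped: step limit reached"


print(react("Where is order A17?"))
```

The `max_steps` cap matters in practice: without it, a policy that never emits `finish` would loop indefinitely.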

Planning Loops: From Goals to Execution

Reasoning alone is not sufficient—agents also require structured planning loops to execute tasks effectively. These loops typically involve:

- Goal decomposition: Breaking high-level objectives into smaller, manageable sub-goals.

- Subtask prioritization: Determining execution order based on urgency, dependencies, and impact.

- Iteration and re-planning: Adjusting plans dynamically as new data or constraints emerge.

This continuous plan–act–observe–refine cycle allows AI agents to function reliably even when conditions change.
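A small sketch can show how decomposition, dependency-based ordering, and re-planning fit together. It uses `graphlib.TopologicalSorter` from the Python standard library; the vendor-onboarding sub-goals and the `replan` helper are hypothetical, and ordering here considers dependencies only, not urgency or impact.

```python
from graphlib import TopologicalSorter

# Hypothetical sub-goals for "onboard a new vendor", mapped to their prerequisites.
plan = {
    "collect_documents": set(),
    "run_compliance_check": {"collect_documents"},
    "negotiate_pricing": {"collect_documents"},
    "create_contract": {"run_compliance_check", "negotiate_pricing"},
}


def execution_order(subgoals: dict[str, set[str]]) -> list[str]:
    # Prioritization by dependency: every task appears after its prerequisites.
    return list(TopologicalSorter(subgoals).static_order())


def replan(subgoals: dict[str, set[str]], failed: str, fix: str) -> dict[str, set[str]]:
    # Re-planning: add a corrective task that must finish before the failed one is retried.
    updated = {task: set(deps) for task, deps in subgoals.items()}
    updated[fix] = set(updated[failed])
    updated[failed] = updated[failed] | {fix}
    return updated


order = execution_order(plan)
print(order)

# Suppose the compliance check fails because a certificate is missing.
revised = replan(plan, failed="run_compliance_check", fix="request_missing_certificate")
print(execution_order(revised))
```

`replan` returns a new plan instead of mutating the old one, so the original can be kept for audit or comparison.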

Enterprise Applications of Agentic Reasoning

In enterprise settings, reasoning and planning capabilities unlock high-impact use cases, including:

- Procurement optimization, where agents evaluate vendors, pricing, and compliance constraints before recommending actions.

- Incident resolution, where agents triage alerts, correlate signals across systems, and propose remediation steps.

- Compliance workflows, where agents interpret policies, audit data, and flag risks proactively.

By combining structured reasoning frameworks with adaptive planning loops, AI agents move beyond task execution into true decision intelligence, making them a foundational technology for the next generation of enterprise systems.


Tools & Action Layers: How AI Agents Interact with Systems

The tools and action layers are where AI agents translate reasoning into actionable steps. Tools can encompass a wide array of components, including APIs, databases, SaaS systems, and scripts for executing tasks.

Key aspects include:

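- Tool calling: The agent selects one of the tools it has been given and issues a structured request to run it.

- Function calling: The model emits a function name and arguments that match a declared schema, which the runtime then executes on the agent's behalf.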

- Action validation: Ensures actions align with intent and are executed correctly.

Uncontrolled tool execution carries inherent risks, however, so governance frameworks are needed around it. According to IBM, agentic tool orchestration can reduce manual operations effort by about 40% in IT workflows.

Examples of this in action include:

- Creating Jira tickets

- Executing cloud scripts

- Updating CRM records

| Agent Tools | Traditional Automation |
|---|---|
| Dynamic and adaptable | Static and predefined |
| Proactive responses | Reactive scripts |
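Action validation can be sketched as a thin gate between what the model proposes and what actually runs. The registry, tool names, and argument schemas below are assumptions made for this example and do not correspond to any particular vendor API.

```python
from typing import Any, Callable

# Hypothetical registry: tool name -> (required argument types, implementation).
REGISTRY: dict[str, tuple[dict[str, type], Callable[..., str]]] = {
    "create_ticket": (
        {"title": str, "priority": int},
        lambda title, priority: f"ticket created: {title} (P{priority})",
    ),
    "update_crm": (
        {"account_id": str, "field": str, "value": str},
        lambda account_id, field, value: f"{account_id}.{field} = {value}",
    ),
}


def call_tool(name: str, args: dict[str, Any], allowed: set[str]) -> str:
    """Validate a model-proposed call before executing it."""
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    if name not in allowed:
        raise PermissionError(f"tool not permitted for this agent: {name}")
    schema, impl = REGISTRY[name]
    if set(args) != set(schema):
        raise ValueError(f"expected arguments {sorted(schema)}, got {sorted(args)}")
    for key, expected in schema.items():
        if not isinstance(args[key], expected):
            raise TypeError(f"{key} must be {expected.__name__}")
    return impl(**args)


# The dictionary below mimics what a model might emit as structured output.
proposed = {"name": "create_ticket", "args": {"title": "Login page 500s", "priority": 2}}
print(call_tool(proposed["name"], proposed["args"], allowed={"create_ticket"}))
```

The allow-list is passed per call, so the same registry can serve agents with different permissions; an agent limited to `create_ticket` cannot reach `update_crm` even if the model asks for it.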

Autonomy Levels in AI Agents: From Assistive to Fully Autonomous

Autonomy defines how independently an AI agent can operate within a system. Rather than being a binary choice, autonomy exists on a spectrum, allowing enterprises to adopt AI agents gradually based on risk tolerance, regulatory needs, and operational maturity. These levels include:

- Assistive agents (Human-in-the-loop): These agents support users by generating recommendations, insights, or next-step suggestions but require explicit human approval before acting. They are commonly used in customer support, analytics, and content generation, where accuracy and oversight are critical.

- Semi-autonomous agents (Human-on-the-loop): Semi-autonomous agents can execute predefined tasks independently while escalating exceptions or sensitive decisions for human review. This model is well-suited for workflows like incident management, procurement approvals, or routine IT operations where speed matters but governance is still required.

- Fully autonomous agents (Human-out-of-the-loop): Fully autonomous agents operate end-to-end based on predefined policies, constraints, and guardrails. They continuously plan, act, and self-correct without manual intervention, making them ideal for high-frequency, low-risk tasks such as system monitoring, automated remediation, or dynamic resource allocation.

A survey by Accenture found that 72% of enterprises prefer human-in-the-loop systems during the early stages of deploying AI agents, highlighting the need for governance, guardrails, and risk assessment in designing these systems.
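The autonomy spectrum can be expressed as a small routing rule that decides whether a proposed action runs, waits, or escalates. This is a sketch only: the `risk` score and its 0.5 threshold are hypothetical inputs that some upstream policy would have to supply.

```python
from enum import Enum


class Autonomy(Enum):
    ASSISTIVE = "human-in-the-loop"
    SEMI_AUTONOMOUS = "human-on-the-loop"
    FULLY_AUTONOMOUS = "human-out-of-the-loop"


def decide(level: Autonomy, risk: float, risk_threshold: float = 0.5) -> str:
    """Return 'execute', 'escalate', or 'await_approval' for a proposed action.

    risk is a hypothetical score in [0, 1]; higher means more sensitive.
    """
    if level is Autonomy.ASSISTIVE:
        return "await_approval"     # every action needs explicit sign-off
    if level is Autonomy.SEMI_AUTONOMOUS:
        # Routine actions run; sensitive ones go to a human reviewer.
        return "escalate" if risk >= risk_threshold else "execute"
    # Fully autonomous: runs end to end. Guardrails are assumed to be
    # enforced elsewhere, for example in the tool layer.
    return "execute"


for level in Autonomy:
    print(level.value, "->", decide(level, risk=0.8))
```

Keeping the level as configuration rather than code makes phased adoption straightforward: the same agent can start as assistive and be promoted later without a rewrite.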


Single-Agent vs Multi-Agent Systems

AI agents can be deployed either as standalone entities or as coordinated groups, and the choice between single-agent and multi-agent systems has a direct impact on scalability, reliability, and problem-solving capability. A single-agent system is designed to perceive inputs, reason, act, and learn within a self-contained loop. This approach works well for narrowly scoped tasks where decision paths are linear and dependencies are minimal, such as answering user queries, summarizing documents, or triggering predefined workflows.

However, as enterprise use cases grow in complexity—spanning multiple systems, approvals, and decision layers—single agents can become bottlenecks. This is where multi-agent systems offer a structural advantage. In a multi-agent setup, responsibilities are distributed across specialized agents that collaborate toward a shared goal. Each agent focuses on a specific function, reducing cognitive load and improving overall system throughput.

Multi-agent architectures borrow from principles of swarm intelligence, where collective behavior emerges from coordinated but independent actors. Instead of one agent attempting to plan, execute, validate, and optimize simultaneously, tasks are broken down and handled in parallel. This division of labor leads to faster execution, higher accuracy, and greater resilience when handling failures or unexpected inputs.

Single-Agent vs. Multi-Agent Systems: Key Differences

| Dimension | Single-Agent Systems | Multi-Agent Systems |
|---|---|---|
| Task Scope | Best suited for well-defined, linear workflows where tasks follow a predictable sequence. | Designed for complex, multi-step workflows with dependencies, parallel execution, and coordination across tasks. |
| Scalability | Scales vertically by allocating more compute, memory, or model capacity to a single agent. | Scales horizontally by adding specialized agents (planner, executor, validator, reviewer) as workload increases. |
| Fault Tolerance | A failure or error can halt execution entirely, requiring manual intervention or restart. | More resilient: tasks can be reassigned or rerouted if one agent fails or underperforms. |
| Decision Quality | Decisions rely on a single reasoning path, increasing the risk of blind spots or errors. | Higher decision quality through agent collaboration, critique loops, validation, and consensus-building mechanisms. |

Enterprise data supports this architectural shift. A recent OpenAI study found that multi-agent systems improved throughput by nearly 60% for complex workflows, particularly those involving planning, execution, and validation stages. As a result, organizations are increasingly adopting multi-agent patterns for use cases such as automated incident response, procurement orchestration, financial reconciliation, and large-scale compliance workflows.

In practice, many mature AI platforms start with single-agent designs and evolve into multi-agent systems as complexity, scale, and autonomy requirements increase.
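The planner, executor, and validator roles from the comparison table can be sketched as plain functions, with rerouting when one executor fails. All names here are hypothetical, the "agents" are deterministic stand-ins rather than model calls, and steps run sequentially for simplicity.

```python
from typing import Callable


def planner(goal: str) -> list[str]:
    # Planner agent: split the goal into steps. A fixed split stands in for a model.
    return [part.strip() for part in goal.split(" then ")]


def executor(step: str) -> str:
    # Executor agent: carry out one step. Here it only reports completion.
    return f"done: {step}"


def flaky_executor(step: str) -> str:
    # Simulates a failing agent so the fallback path is exercised.
    raise RuntimeError("executor unavailable")


def validator(step: str, result: str) -> bool:
    # Validator agent: check the result against the step it claims to complete.
    return result == f"done: {step}"


def run(goal: str, executors: list[Callable[[str], str]]) -> list[str]:
    results = []
    for step in planner(goal):
        for execute in executors:      # reroute to the next executor on failure
            try:
                result = execute(step)
            except RuntimeError:
                continue
            if validator(step, result):
                results.append(result)
                break
        else:
            raise RuntimeError(f"no executor could complete: {step}")
    return results


print(run("match invoices then flag mismatches", [flaky_executor, executor]))
```

The first executor always fails, yet the run still completes, which is the fault-tolerance property the table attributes to multi-agent designs.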

Real-World Enterprise Use Cases of AI Agents

AI agents are increasingly being adopted in various industries, demonstrating their effectiveness in streamlining processes. Here are some illustrative use cases:

- Customer support resolution agents: Automate responses to frequently asked questions, enabling faster resolution times.

- Finance reconciliation agents: Facilitate the reconciliation of discrepancies to improve accuracy and compliance.

- DevOps remediation agents: Automatically address issues in IT systems, reducing downtime and manual oversight.

- Sales & CRM copilots: Assist sales teams by managing leads and customer interactions more efficiently.

Deloitte estimates that implementing agentic workflows can reduce operational costs by 20-35% in large enterprises, evidencing their potential economic impact.

Challenges & Risks in AI Agent Systems

While AI agents unlock powerful automation and decision-making capabilities, their deployment introduces a new class of technical, operational, and governance risks that enterprises must proactively address. Unlike traditional software, AI agents operate probabilistically, interact with external tools, and may act autonomously—making risk management a first-class design concern rather than an afterthought.

Hallucinations & Incorrect Reasoning

AI agents may generate confident but incorrect outputs, especially when operating with incomplete context or ambiguous instructions. In enterprise workflows—such as compliance reviews, procurement decisions, or incident response—these hallucinations can lead to faulty actions, regulatory exposure, or operational delays. The risk increases when agents are chained across multiple reasoning steps, where early errors can compound downstream.

Tool Misuse & Unsafe Actions

Agents equipped with tool access (APIs, databases, ticketing systems, cloud infrastructure) may execute unintended or unsafe actions if guardrails are weak. For example, an agent may trigger incorrect database updates, send unauthorized communications, or overstep approval boundaries. This risk is amplified in autonomous or semi-autonomous systems where real-world actions occur without immediate human validation.

Feedback Loops & Behavioral Drift

AI agents learn from prior interactions, logs, and outcomes. Without careful controls, this can create negative feedback loops—where incorrect assumptions reinforce themselves over time. Behavioral drift may cause agents to deviate from intended objectives, particularly in long-running systems such as customer support agents or operational copilots.

Security, Privacy & Access Control

AI agents often require broad access to systems and sensitive data, raising concerns around identity management, least-privilege enforcement, and data leakage. Poorly scoped permissions or insecure prompt handling can expose confidential information or create attack surfaces for prompt injection and adversarial misuse.

Cost Unpredictability & Operational Overhead

Agent-based systems can incur highly variable costs due to recursive reasoning, tool calls, multi-agent orchestration, and memory storage. Without rate limits, execution budgets, and observability, enterprises may face unexpected infrastructure and API expenses—especially at scale.

Mitigation strategies typically include retrieval grounding (RAG), policy-based tool execution, human-in-the-loop checkpoints, sandboxed environments, cost ceilings, and continuous monitoring. Successful AI agent deployments treat risk management as a core architectural principle—balancing autonomy with accountability to ensure trust, safety, and long-term sustainability.
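Of these mitigations, cost ceilings and step limits are the simplest to show in code. The `ExecutionBudget` class below is a hypothetical sketch, and the per-call price is invented; the point is only that the check happens before each call is committed.

```python
class BudgetExceeded(Exception):
    pass


class ExecutionBudget:
    """Tracks spend and step count for one agent run. Prices here are made up."""

    def __init__(self, max_cost_usd: float, max_steps: int):
        self.max_cost_usd = max_cost_usd
        self.max_steps = max_steps
        self.spent = 0.0
        self.steps = 0

    def charge(self, cost_usd: float) -> None:
        # Check before committing, so the ceiling is never crossed.
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} reached")
        if self.spent + cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost ceiling ${self.max_cost_usd:.2f} reached")
        self.steps += 1
        self.spent += cost_usd


budget = ExecutionBudget(max_cost_usd=0.10, max_steps=10)
completed = 0
try:
    while True:                 # a runaway reasoning loop
        budget.charge(0.03)     # hypothetical cost of one model or tool call
        completed += 1
except BudgetExceeded as stop:
    print(f"halted after {completed} calls: {stop}")
```

Raising an exception, rather than returning a flag, makes it hard for calling code to ignore the limit by accident.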


Best Practices for Designing Enterprise-Grade AI Agents

Designing AI agents for enterprise environments requires more than strong models; it demands architectural discipline, governance, and operational rigor. The following best practices help ensure that AI agents remain reliable, secure, and scalable as they move from experimentation to production.

- Modular architecture is foundational to enterprise-grade AI agents. By separating concerns—such as perception, reasoning, memory, tool execution, and policy enforcement—teams can evolve individual components without destabilizing the entire system. This modularity enables faster upgrades (for example, swapping out an LLM or vector database), easier debugging, and smoother AI integration with existing enterprise systems like CRMs, ERPs, and data warehouses.

- Observability is critical once agents begin making autonomous decisions. Enterprises must be able to trace why an agent acted in a certain way, which tools it invoked, and what data influenced its reasoning. Logging agent decisions, tool calls, latency, and failure points enables teams to detect drift, identify hallucinations early, and meet audit or compliance requirements. Without strong observability, AI agents quickly become opaque systems that are difficult to trust or govern.

- Human-in-the-loop (HITL) mechanisms provide a safety net for high-impact workflows. Rather than full autonomy from day one, enterprises often gate sensitive actions—such as financial approvals, customer communications, or system changes—behind human validation. Over time, as confidence in the agent grows, approval thresholds can be adjusted. This phased autonomy approach reduces risk while still delivering productivity gains.

- Policy enforcement ensures that AI agents operate within defined ethical, legal, and operational boundaries. Policies can restrict which tools an agent can access, what data it may retrieve, or which actions require escalation. Embedding these rules directly into the agent runtime—rather than relying solely on prompts—creates more robust safeguards against misuse or unintended behavior.

- Finally, cost controls are essential for sustainable deployment. Agent-based systems can generate unpredictable expenses due to tool calls, long reasoning chains, or multi-agent coordination. Enterprises should implement usage limits, cost-aware routing, and periodic performance reviews to balance capability with budget discipline.

Together, these practices transform AI agents from experimental prototypes into dependable enterprise systems that leadership can confidently scale.
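As an illustration of the observability practice, the sketch below wraps a tool so that every call leaves a structured record of its arguments, outcome, and latency. The `traced` wrapper, the in-memory `TRACE` list, and the `fetch_balance` tool are assumptions for this example; a production system would send records to a logging or tracing backend instead.

```python
import json
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []   # stand-in for a log pipeline


def traced(tool_name: str, fn: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a tool so every call records arguments, outcome, and latency."""
    def wrapper(**kwargs: Any) -> Any:
        start = time.perf_counter()
        record: dict[str, Any] = {"tool": tool_name, "args": kwargs}
        try:
            result = fn(**kwargs)
            record.update(status="ok", result=result)
            return result
        except Exception as exc:
            record.update(status="error", error=repr(exc))
            raise
        finally:
            # Runs on both success and failure, so no call goes unrecorded.
            record["latency_ms"] = round((time.perf_counter() - start) * 1000, 3)
            TRACE.append(record)
    return wrapper


def fetch_balance(account_id: str) -> int:
    # Hypothetical tool; raises KeyError for unknown accounts.
    balances = {"acct-1": 1200}
    return balances[account_id]


fetch = traced("fetch_balance", fetch_balance)
fetch(account_id="acct-1")
try:
    fetch(account_id="acct-9")
except KeyError:
    pass

for entry in TRACE:
    print(json.dumps(entry))
```

Recording failures alongside successes is what makes the trace useful for audits: the error case is logged and then re-raised, so the caller still sees it.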

Future of AI Agents: From Digital Workers to Autonomous Organizations

The future of AI agents promises further advances. Agent swarms are expected to become more common, which may in turn lead to AI managers that oversee groups of agents. Deeper integration with Enterprise Resource Planning (ERP), Customer Relationship Management (CRM), and DevOps stacks will further cement the role of AI agents in business processes.

PwC forecasts that AI agents may handle up to 25% of knowledge work tasks by 2030. This shift will redefine how enterprises operate, allowing organizations to focus on strategic initiatives while agents manage routine tasks.

Why Wow Labz Is the Right Partner for Building Enterprise-Grade AI Agents

Building AI agents that work reliably in real-world enterprise environments requires far more than connecting an LLM to a few tools. It demands deep expertise across architecture design, reasoning systems, memory management, security, and long-term operations. This is where Wow Labz stands apart.

At Wow Labz, we help organizations move from experimental agents to production-ready, governed, and scalable AI agent systems that deliver measurable business impact – focusing on modular architectures, robust reasoning pipelines, memory orchestration, and policy-driven autonomy. Our teams have hands-on experience implementing single-agent and multi-agent systems, integrating tools via secure APIs, embedding vector databases for long-term memory, and applying retrieval-augmented generation (RAG) patterns for accuracy and control. This ensures agents are not just intelligent, but predictable, auditable, and aligned with enterprise workflows.

We also bring strong depth in enterprise AI readiness. From observability and human-in-the-loop controls to access governance, cost optimization, and MLOps for continuous improvement, Wow Labz builds AI agents that can be safely deployed across critical functions like operations, compliance, customer support, analytics, and internal automation. Our approach balances autonomy with oversight—helping teams unlock speed and efficiency without compromising trust, security, or compliance.


If you are looking to enhance your operational capabilities, consider booking a Generative AI Agent Architecture Workshop or requesting a Proof of Concept (PoC) for AI agents. Connect with our AI architects today and explore the potential of agentic AI in your organization.

FAQs

What are AI agents?

AI agents are autonomous software entities that can independently execute tasks, learn from their environments, and make decisions toward achieving goals.

How are AI agents different from chatbots?

Unlike chatbots, which respond based on predefined scripts, AI agents utilize complex workflows to interpret data, reason about decisions, and perform actions autonomously.

Are AI agents safe for enterprises?

While AI agents present immense potential, they also pose risks such as tool misuse and hallucinations, making governance and oversight essential for safe deployment.

What tech stack is used for AI agents?

The tech stack can vary, but it generally includes machine learning frameworks, vector databases for memory storage, and APIs for tool interactions.

How long does it take to build an AI agent?

The timeline depends on the complexity of the agent and the specific use case, but initial iterations can take anywhere from weeks to several months to develop and deploy.