Agentic AI describes systems that go beyond reacting to commands. Unlike traditional AI, which simply responds to prompts, agentic AI can plan, reason, make decisions, and act toward achieving a goal. These intelligent agents do not just follow instructions. They work autonomously, taking initiative while adapting to changing situations.

Building these autonomous AI systems is no small feat. They need a robust, modular, and composable architecture that allows different functions to work together smoothly. The Agentic AI Stack provides exactly that, combining essential building blocks like perception, memory, reasoning, planning, and tool use to create truly independent, goal-driven systems. This layered approach ensures these agents can operate reliably, scale effectively, and continue learning over time.

In the sections ahead, we will break down each part of the agentic AI stack. You will see how perception, reasoning, memory, planning, tool use, and feedback mechanisms all come together to form the backbone of modern autonomous AI systems.

If you want to understand how tomorrow’s AI will think, act, and improve, then you are in the right place.

The Rise of Autonomous Systems and AI Agents

From Static Models to Dynamic Agents

Artificial Intelligence is undergoing a fundamental shift. We are moving from static models that react to prompts to intelligent agents that act with purpose, learn from experience, and complete complex tasks independently.

While Large Language Models (LLMs) have made it easier for machines to process and generate human-like language, they remain reactive by design. They lack memory, initiative, and the ability to carry out multi-step actions unless guided at every step.

This is where autonomous AI agents come in. These systems are:

- Proactive: They don’t wait for commands but can initiate tasks based on goals.
- Adaptive: They adjust their strategies based on changing data or feedback.
- Tool-using: They can call APIs, run code, browse the web, or trigger workflows.
- Memory-enabled: They learn from past actions to improve future performance.

Put simply, agents represent a new era of dynamic, goal-driven intelligence.

Market Trends and Real-World Applications

The rise of agentic AI isn’t just a technical development; it reflects real market demand for systems that can do more with less oversight.

Organisations across sectors are already applying autonomous agents in meaningful ways:

- Healthcare:
  - Personalised diagnosis support and treatment planning
  - Monitoring patient data to alert clinicians in real time
- Finance:
  - Automating compliance checks and fraud detection
  - Assisting with real-time risk assessment and investment decisions
- E-commerce:
  - Powering intelligent shopping assistants
  - Automating customer service and order tracking
- Logistics:
  - Optimising delivery routes and warehouse management
  - Adapting to supply chain disruptions on the fly
- Education:
  - Creating adaptive learning paths based on student progress
  - Supporting teachers with content recommendations and analytics

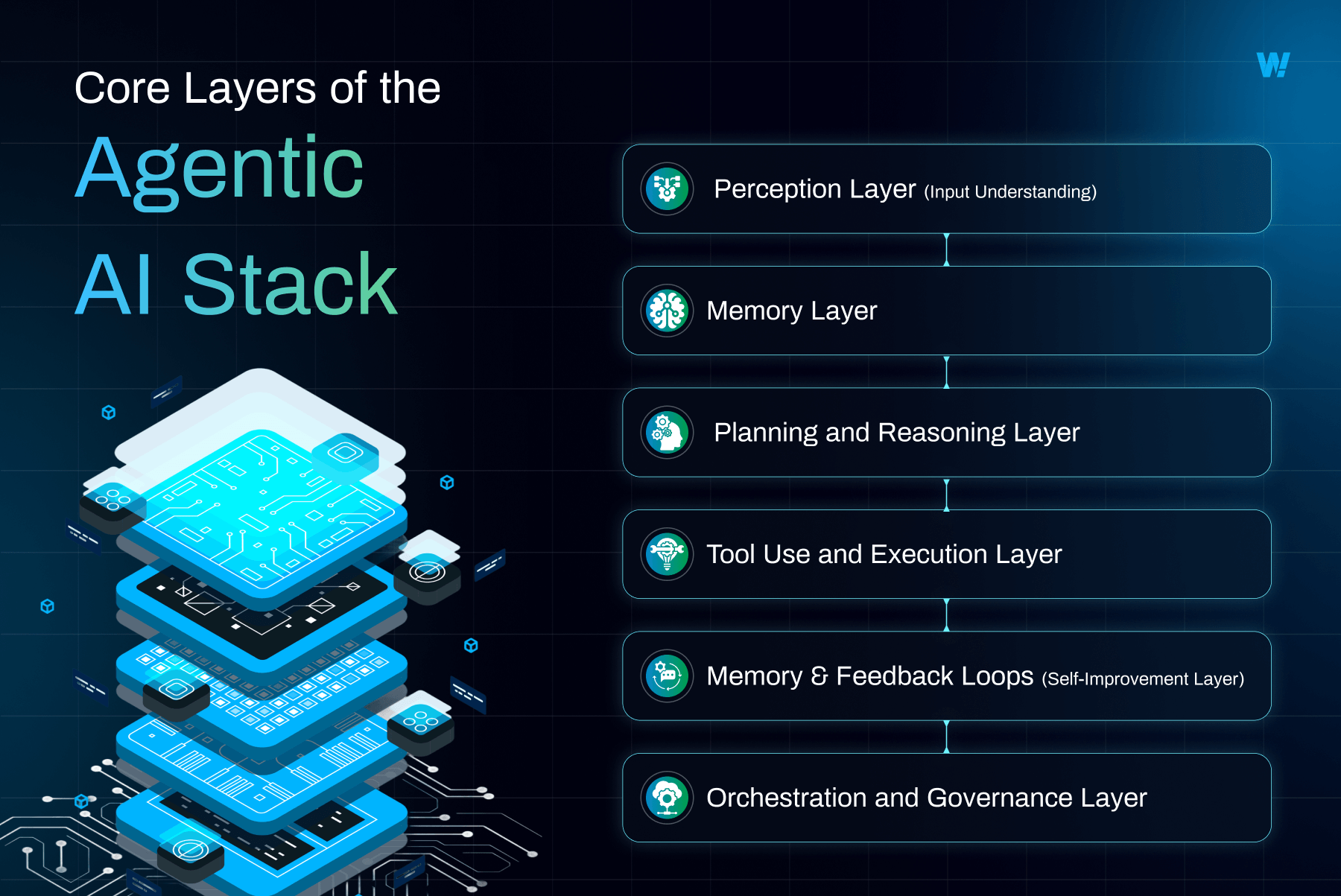

Core Layers of the Agentic AI Stack

At the heart of every autonomous system lies a carefully constructed architecture. The Agentic AI Stack is made up of several interconnected layers, each responsible for a distinct function in how an agent perceives, plans, acts, and learns. Let’s take a closer look at the core layers that give AI agents their intelligence and adaptability.

1. Perception Layer (Input Understanding)

This is the entry point of the agent’s interaction with the world. The Perception Layer is responsible for interpreting inputs, whether they are written, spoken, visual, or even physical.

Agents today are no longer limited to processing just text. Modern systems use multimodal AI to handle:

- Text: Instructions, prompts, or documents
- Voice: Natural language spoken by users
- Images and Video: Visual information from cameras or files
- Sensor Data: Inputs from IoT devices or real-world sensors

Pretrained vision-language models like CLIP (Contrastive Language–Image Pre-training) and Flamingo combine visual and textual understanding, enabling agents to understand what they see and read, all at once.

This layer gives the agent situational awareness, allowing it to make sense of diverse and often unstructured data.
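To make the idea concrete, here is a minimal sketch of how a perception step might normalise different inputs into one common record. The `Observation` type and the `perceive` function are hypothetical names used only for illustration, and the routing is based on simple type checks. A real perception layer would call speech-to-text or a vision-language model at this point.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Observation:
    """A normalised input for the rest of the agent (hypothetical type)."""
    modality: str   # "text", "image", or "sensor"
    content: Any    # cleaned payload, e.g. a string or a dict of readings
    metadata: dict = field(default_factory=dict)


def perceive(raw: Any) -> Observation:
    """Label a raw input with a modality using simple type checks."""
    if isinstance(raw, str):
        text = raw.strip()
        return Observation("text", text, {"length": len(text)})
    if isinstance(raw, bytes):
        # Assumption: raw bytes are treated as an image in this sketch.
        return Observation("image", raw, {"size_bytes": len(raw)})
    if isinstance(raw, dict):
        # Assumption: a dict holds named sensor readings.
        return Observation("sensor", raw, {"fields": sorted(raw)})
    raise TypeError(f"unsupported input type: {type(raw).__name__}")


obs = perceive("  Where is my order?  ")
print(obs.modality, obs.content, obs.metadata)
```

Whatever the modality, downstream layers then receive the same shape of data, which keeps the rest of the stack independent of where the input came from.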

2. Memory Layer

For an agent to act intelligently over time, it must remember not just the current task but also past interactions, user preferences, and contextual information.

The Memory Layer supports both:

- Short-term memory: Information relevant to the current task or session
- Long-term memory: Stored knowledge that persists across tasks or interactions

To manage memory efficiently, agents use vector databases such as:

- Pinecone
- Weaviate
- FAISS (Facebook AI Similarity Search)

These systems store information in high-dimensional vector formats, making it possible to retrieve relevant context quickly and accurately.

This persistent context allows agents to behave consistently, maintain user history, and offer continuity across sessions, much like a human assistant would.
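The retrieval idea behind a vector database can be sketched in a few lines of plain Python. This is not how Pinecone, Weaviate, or FAISS are implemented or called. The `VectorMemory` class is a hypothetical stand-in, and the three-dimensional vectors are hand-written placeholders for embeddings that a real system would get from an embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Toy long-term memory: stores (vector, text) pairs in a list."""

    def __init__(self):
        self._items = []

    def add(self, vector, text):
        self._items.append((list(vector), text))

    def search(self, query, k=1):
        """Return the k stored texts whose vectors are closest to the query."""
        ranked = sorted(
            self._items,
            key=lambda item: cosine(query, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


memory = VectorMemory()
memory.add([1.0, 0.0, 0.0], "User prefers email updates")
memory.add([0.0, 1.0, 0.0], "Last order was delayed")
memory.add([0.9, 0.1, 0.0], "User asked to unsubscribe from SMS")

print(memory.search([1.0, 0.0, 0.0], k=2))
```

The linear scan here is fine for a handful of items. Dedicated vector databases exist because they use approximate nearest-neighbour indexes to keep lookups fast at much larger scale.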

3. Planning and Reasoning Layer

Once an agent understands its inputs and has access to memory, it must decide what to do next. The Planning and Reasoning Layer enables this decision-making.

At its core, this layer handles:

- Task decomposition: Breaking down complex goals into smaller, manageable actions
- Goal planning: Sequencing those actions to reach a desired outcome
- Logical reasoning: Making decisions based on rules, probabilities, or learned strategies

Several planning frameworks have emerged to guide this process, including:

- ReAct (Reason + Act)
- Chain-of-Thought (CoT)
- Tree of Thoughts

Agents often use decision trees and branching logic to evaluate different pathways and select the best course of action.

This is the layer where autonomous planning happens, where an agent goes beyond simple responses and starts to reason like a strategist.
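A ReAct-style loop alternates between a thought, an action, and an observation until the agent decides it is done. The sketch below shows only the shape of that loop. The `scripted_policy` function is a hard-coded stand-in for the LLM call a real agent would make, and `lookup_order` and its data are invented for illustration.

```python
def scripted_policy(goal, history):
    """Stand-in for an LLM call: returns (thought, action, argument)."""
    if not history:
        return ("I need the order status first.", "lookup_order", goal["order_id"])
    last_observation = history[-1][2]
    if last_observation == "delayed":
        return ("The order is delayed.", "finish",
                "Apologise and share new delivery date")
    return ("Nothing more to do.", "finish", "Confirm order is on track")


def lookup_order(order_id):
    """Hypothetical tool backed by made-up data."""
    fake_db = {"A1": "delayed", "B2": "shipped"}
    return fake_db.get(order_id, "unknown")


def react_loop(goal, policy, tools, max_steps=5):
    """Run thought -> action -> observation steps until 'finish'."""
    history = []  # list of (thought, action, observation)
    for _ in range(max_steps):
        thought, action, argument = policy(goal, history)
        if action == "finish":
            return argument, history
        observation = tools[action](argument)
        history.append((thought, action, observation))
    return "Stopped: step limit reached", history


answer, trace = react_loop(
    {"order_id": "A1"}, scripted_policy, {"lookup_order": lookup_order}
)
print(answer)
print(trace)
```

The `max_steps` cap is a deliberate design choice: it stops the loop from running forever if the policy never chooses to finish.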

4. Tool Use and Execution Layer

Intelligent agents are not confined to internal reasoning. They can reach out into the world and take action, thanks to the Tool Use and Execution Layer.

This layer enables agents to:

- Call external tools or APIs, such as:
  - Calculators
  - CRMs and databases
  - Web browsers
  - Scheduling systems
- Trigger workflows across platforms, enabling end-to-end automation

Examples of agents and agent frameworks that operate heavily in this layer include:

- Auto-GPT
- AgentGPT
- LangGraph

By integrating with external systems, agents gain what’s called tool-augmented cognition. In other words, they extend their intelligence by using specialised tools — just as humans use spreadsheets or search engines to support their thinking.
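One common way to wire up tool use is a registry that maps tool names to functions, plus a dispatcher that executes whatever structured request the model produces. The sketch below assumes a request shaped like `{"tool": ..., "args": {...}}`. That shape, the `tool` decorator, and the two example tools are all hypothetical and not taken from any particular framework.

```python
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., object]] = {}


def tool(name):
    """Decorator that registers a function under a tool name."""
    def register(func):
        TOOLS[name] = func
        return func
    return register


@tool("calculator")
def add(a: float, b: float) -> float:
    return a + b


@tool("schedule")
def schedule(title: str, day: str) -> str:
    # Placeholder: a real tool would call a calendar API here.
    return f"Booked '{title}' on {day}"


def call_tool(request: dict) -> dict:
    """Execute a tool request and report success or failure."""
    name = request.get("tool")
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**request.get("args", {}))}
    except TypeError as exc:
        # Raised when the arguments do not match the tool's signature.
        return {"ok": False, "error": str(exc)}


print(call_tool({"tool": "calculator", "args": {"a": 2, "b": 3}}))
print(call_tool({"tool": "browser", "args": {}}))
```

Returning an error record instead of raising lets the agent see the failure as an observation and try a different tool or different arguments.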

5. Memory & Feedback Loops (Self-Improvement Layer)

True intelligence requires learning from experience. The Self-Improvement Layer enables agents to adapt over time, becoming smarter, faster, and more accurate.

This is achieved through:

- Feedback mechanisms: Evaluating outcomes of past actions
- Reward systems: Guiding behaviour based on success or failure
- Reinforcement Learning: Continuously improving through trial and error
- RLHF (Reinforcement Learning from Human Feedback): Aligning agent behaviour with human values and expectations

Agents in this layer don’t just execute plans — they reflect, adjust, and evolve. Over time, this leads to more effective decision-making and more trustworthy performance.
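As a rough illustration of a reward-driven feedback loop, the sketch below keeps a running average reward for each strategy and prefers the one that has worked best so far. It is a deliberately simple, bandit-style simplification and not full reinforcement learning or RLHF. The `StrategyScores` class, the strategy names, and the reward values are invented for the example.

```python
class StrategyScores:
    """Tracks the average reward observed for each strategy."""

    def __init__(self, strategies):
        self.counts = {s: 0 for s in strategies}
        self.values = {s: 0.0 for s in strategies}

    def record(self, strategy, reward):
        """Update the running mean: new = old + (reward - old) / n."""
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n

    def best(self):
        """Return the strategy with the highest average reward so far."""
        return max(self.values, key=self.values.get)


scores = StrategyScores(["short_answer", "detailed_answer"])
feedback_log = [
    ("short_answer", 0.0),     # user was unhappy
    ("detailed_answer", 1.0),  # user was happy
    ("detailed_answer", 0.5),
    ("short_answer", 1.0),
]
for strategy, reward in feedback_log:
    scores.record(strategy, reward)

print(scores.best(), scores.values)
```

A production system would usually also explore less-tried strategies from time to time, so that one unlucky early result does not rule an option out for good.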

Each layer of the Agentic AI Stack builds upon the previous one. Together, they form a robust foundation for autonomous systems that are capable of perceiving, reasoning, acting, and improving, much like a living, learning assistant tailored for real-world complexity.

6. Orchestration and Governance Layer

As autonomous systems become more complex, coordinating their behaviour and ensuring safety becomes essential. That’s where the Orchestration and Governance Layer comes in.

This layer focuses on managing agent workflows, especially when multiple agents are involved or when decisions carry high stakes. It ensures that agents:

- Operate within clearly defined boundaries
- Coordinate across tasks or systems without conflict
- Follow appropriate decision protocols

In addition to managing workflows, this layer also deals with safety and reliability. A key concern in autonomous AI is preventing:

- Hallucinations (when the agent generates false or misleading outputs)
- Unintended actions that could impact users or systems adversely

To address these challenges, developers use orchestration and guardrail frameworks such as:

- CrewAI – for managing collaborative multi-agent systems
- LangChain – for building structured chains of tasks and tools
- AutoGen – for designing agent behaviours in controlled settings
- Guardrails – for enforcing constraints and safety checks in output

By governing what agents can do, how they communicate, and where they must stop, this layer acts as the supervisory system — the conductor that keeps the orchestra in sync and on key.
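A governance check can be as simple as reviewing each proposed action against a policy before it runs. The sketch below is a hand-rolled illustration and does not use the API of Guardrails or any other framework named above. The `Policy` fields, the action names, and the refund limit are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Boundaries for one agent (hypothetical fields)."""
    allowed_actions: frozenset
    max_refund: float


def review_action(action: dict, policy: Policy) -> tuple:
    """Return (decision, reason); decision is 'allow', 'escalate', or 'block'."""
    name = action.get("name")
    if name not in policy.allowed_actions:
        return ("block", f"action '{name}' is outside the agent's boundaries")
    if name == "issue_refund" and action.get("amount", 0) > policy.max_refund:
        # High-stakes decisions are handed to a human instead of executed.
        return ("escalate", "refund exceeds limit; needs human approval")
    return ("allow", "within policy")


policy = Policy(frozenset({"check_order", "issue_refund"}), max_refund=100.0)

print(review_action({"name": "check_order"}, policy))
print(review_action({"name": "issue_refund", "amount": 250.0}, policy))
print(review_action({"name": "delete_account"}, policy))
```

Having three outcomes rather than a plain yes or no reflects the point about decision protocols: some actions are neither safe to run automatically nor worth refusing outright.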

Agentic AI Stack in Action: Real-World Architectures

The layers of the Agentic AI Stack become most meaningful when applied to real-world problems. Below are two examples that demonstrate how the stack comes together in practical settings: one focused on customer support, the other on research. These workflows highlight how agents use perception, memory, reasoning, tools, and feedback to carry out useful tasks in the real world.

Example 1: Customer Support Agent

In this use case, an AI agent handles customer queries by accessing prior interactions and relevant data from a CRM. The agent doesn’t just respond; it reasons, recalls, and acts.

Workflow:

- Input (Query): A customer types a query into a support chat. This could be about an order, refund, or product issue.
- Perception Layer: The agent interprets the query, extracting intent and relevant details.
- Memory Layer: It accesses the customer’s history, previous tickets, and preferences via a vector database.
- Planning and Reasoning: The agent identifies the steps required to resolve the issue, for example checking order status or initiating a refund.
- Tool Use Layer: It connects to the company’s CRM system (e.g. Salesforce or HubSpot) and retrieves or updates data as needed.
- Output Generation: Finally, it composes a clear and helpful reply for the customer.

This type of agentic AI pipeline reduces manual workload, speeds up response times, and maintains consistent quality across interactions.
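The workflow above can be sketched end to end with plain functions, one per stage. Everything here is a stand-in: the `HISTORY` and `CRM` dictionaries replace a vector database and a real CRM, the keyword matching replaces a language model, and the `ORD-` order ID format is an assumption made for the example.

```python
HISTORY = {"ORD-42": ["Previous ticket: item arrived damaged"]}  # memory stand-in
CRM = {"ORD-42": {"status": "delivered", "refundable": True}}    # CRM stand-in


def perceive(query):
    """Extract a rough intent and an order ID from the query text."""
    words = [w.strip("?.,!").upper() for w in query.split()]
    order_id = next((w for w in words if w.startswith("ORD-")), None)
    intent = "refund" if "REFUND" in words else "order_status"
    return {"intent": intent, "order_id": order_id}


def recall(order_id):
    """Fetch earlier interactions for this order, if any."""
    return HISTORY.get(order_id, [])


def plan(parsed):
    """Decide which steps are needed for this intent."""
    steps = ["check_order"]
    if parsed["intent"] == "refund":
        steps.append("start_refund")
    return steps


def act(step, order_id):
    """Run one step against the CRM stand-in."""
    record = CRM.get(order_id)
    if record is None:
        return "order not found"
    if step == "check_order":
        return f"status is {record['status']}"
    if step == "start_refund":
        return "refund started" if record["refundable"] else "refund not available"
    return "unsupported step"


def handle(query):
    """Perception -> memory -> planning -> tool use -> reply."""
    parsed = perceive(query)
    context = recall(parsed["order_id"])
    results = [act(step, parsed["order_id"]) for step in plan(parsed)]
    note = " We have your earlier ticket on file." if context else ""
    return f"Order {parsed['order_id']}: " + "; ".join(results) + "." + note


print(handle("Can I get a refund for ORD-42?"))
```

Keeping each stage as its own function mirrors the layered stack, so any single stage can later be swapped for a real model or service without touching the others.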

Example 2: Research Agent

In this scenario, an AI agent is tasked with collecting and summarising information from the web. It autonomously searches, processes, and refines knowledge in a structured loop.

Workflow:

- Input (Prompt): A user asks the agent to “Summarise the latest research on AI in healthcare.”
- Perception Layer: The agent parses the request, identifying key terms and scope.
- Tool Use Layer: It accesses search tools like a web browser or academic database API.
- Intermediate Reasoning: The agent decides which sources are relevant, dismisses low-quality results, and retrieves content.
- Summarisation Module: It distils findings into a digestible format using a summarisation model.
- Memory & Feedback Loop: Based on user ratings or corrections, the agent updates its memory or adjusts future research strategies.

This agent behaves more like a personal analyst than a passive tool, capable of learning over time and refining its results based on user feedback.
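Here is a small sketch of that search, filter, summarise, and feedback loop. The `search` function returns made-up results in place of a real web or academic API, the `quality` scores are invented, and the "summary" is just the first sentence of each source, not the output of a summarisation model. The 1 to 5 rating scale is also an assumption.

```python
def search(topic):
    """Stand-in for a search tool; the results below are fabricated examples."""
    return [
        {"title": "Trial of AI triage", "quality": 0.9,
         "text": "AI triage cut waiting times. More study is needed."},
        {"title": "Blog rumour", "quality": 0.2,
         "text": "AI will replace doctors. No evidence given."},
        {"title": "Imaging review", "quality": 0.7,
         "text": "Models matched radiologists on some scans. Bias remains a risk."},
    ]


def summarise(sources):
    """Naive summary: keep the first sentence of each source."""
    return [f"{s['title']}: {s['text'].split('. ')[0]}." for s in sources]


class ResearchAgent:
    def __init__(self, min_quality=0.5):
        self.min_quality = min_quality  # remembered between runs

    def run(self, topic):
        """Search, drop low-quality sources, and summarise the rest."""
        relevant = [s for s in search(topic) if s["quality"] >= self.min_quality]
        return summarise(relevant)

    def feedback(self, rating):
        """Raise the quality bar after a poor rating (assumed 1-5 scale)."""
        if rating <= 2:
            self.min_quality = min(0.95, round(self.min_quality + 0.25, 2))


agent = ResearchAgent()
print(agent.run("AI in healthcare"))
agent.feedback(rating=2)
print(agent.run("AI in healthcare"))
```

After the poor rating, the second run keeps only the highest-quality source, which is the "adjusts future research strategies" step in miniature.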

Tools and Frameworks Powering the Stack

Agentic AI systems rely on a robust foundation of tools, frameworks, and models that support every layer of the stack, from perception and planning to tool use, memory, and feedback. In 2025, the ecosystem has matured significantly, giving developers and researchers the infrastructure to build reliable, intelligent, and scalable agents.

A range of open-source and enterprise-ready frameworks are now available to support the development and orchestration of AI agents:

- LangGraph – A flexible framework for building agent workflows using graph-based decision logic
- CrewAI – Enables role-based multi-agent collaboration with structured task management
- AutoGen – An extensible platform for LLM-driven agent workflows with human-in-the-loop support
- MetaGPT – Inspired by software engineering principles to create modular, structured agents
- ChatDev – Simulates an entire software company with coordinated agents across roles
- Superagent, OpenAgents, and AgentVerse – Toolkits for rapid agent development, integration, and deployment

These frameworks bring orchestration, safety, and modularity to the forefront, allowing agents to interact, reason, and operate autonomously while remaining aligned with user goals.

To read more about these frameworks, see the full article: Agentic AI Frameworks: The Backbone of Autonomous Agents

Modern AI agents also require the ability to process multiple forms of input, from text and voice to image and video. The Perception Layer of the stack is powered by advanced multimodal AI models, including:

- GPT-4 (OpenAI) – For rich language and image understanding
- AlphaCode (DeepMind) – A code-generation system, specialised in code-related tasks rather than multimodal input
- ImageBind (Meta) – Merges text, image, audio, depth, thermal, and motion (IMU) data
- Gemini 1.5 (Google DeepMind) – General-purpose multimodal model
- Flamingo (DeepMind / Hugging Face) – Visual understanding and captioning

We’ve covered these multimodal AI models extensively in a separate blog; give it a read to learn more.

Want to Build with Agentic AI?

Thinking about building AI systems that don’t just respond but plan, reason, and act? Agentic AI lets you go beyond chatbots and into the world of goal-driven, autonomous workflows.

If you’re designing research copilots, smart support agents, creative collaborators, or autonomous business tools, Agentic AI gives your systems memory, decision-making, and initiative. These aren’t just tools. They’re intelligent agents working alongside you.

And with the right stack and team, you don’t need to start from scratch or settle for generic tools. You can build systems tailored to your exact workflows and business goals.

How Wow Labz Can Help You

Build Agentic AI Systems with Wow Labz

At Agentic AI Labz by Wow Labz, we specialise in building full-fledged agentic systems that combine planning, reasoning, memory, and action. If you’re creating AI copilots, autonomous assistants, or smart orchestration systems, we turn complex ideas into real, working products.

We’ve helped AI-first startups and global teams bring autonomous agents to life across industries like finance, edtech, healthcare, and SaaS.

Here’s how we can help you go agentic:

- Deep expertise in planning models, memory management, and agent orchestration
- Hands-on experience with top frameworks like LangGraph, CrewAI, MetaGPT, and AutoGen
- End-to-end system design: research, architecture, tools, training, and deployment
- Custom agents built around your data, goals, and environments
- Real-world integration strategies that scale safely and efficiently

If you’re ready to build intelligent systems that can think, decide, and act—we’d love to help.

Let’s build your next-generation AI system together.