As companies dive deeper into the world of machine learning (ML), many face a common challenge: the failure of ML initiatives due to architectural misalignment rather than model performance. With Gartner reporting that over 60% of ML projects fail to reach production, the importance of making informed architectural choices cannot be overstated. Poor ML architecture can lead to a host of issues, including the inability to scale, high inference costs, slow iteration cycles, and data leakage or compliance risks. Instead of solely focusing on model accuracy, organizations must assess the broader context of data pipelines, model lifecycle management, deployment patterns, and governance controls.

This guide will equip CTOs, engineering leaders, ML engineers, and product managers with insights on how to choose the right ML architecture based on factors such as use case, scale, latency, data sensitivity, team maturity, and cost constraints. With the right architectural decisions, businesses can pave the way for long-term success and sustainable ROI in their ML initiatives.

What Is ML Architecture? A Practical Definition

ML architecture encompasses far more than just the model at its center. It refers to the entire framework that dictates how data flows, how models are trained and updated, and how predictions reach end-users reliably and efficiently. A well-designed ML architecture ensures scalability, maintainability, and performance across the lifecycle of machine learning systems. Key components of ML architecture include:

-

Data ingestion and preprocessing layers: These layers handle the collection, cleaning, transformation, and normalization of raw data, ensuring it is high-quality, structured, and ready for model consumption. Efficient data pipelines are critical for accurate model training and reliable inference.

-

Training and experimentation workflows: This component defines how models are developed, tested, and optimized. It includes experiment tracking, hyperparameter tuning, versioning, and iterative testing to ensure models evolve effectively while meeting performance benchmarks.

-

Model serving and inference layers: Serving layers manage how trained models are deployed and accessed by applications or end-users. This includes scaling inference requests, maintaining low-latency responses, and ensuring models perform reliably in production environments.

-

Monitoring, drift detection, and retraining loops: Continuous monitoring ensures model predictions remain accurate over time. Drift detection identifies changes in data patterns, prompting retraining or updates, which keep the ML systems aligned with real-world conditions.

-

Security, governance, and access controls: Robust ML architectures include mechanisms to secure data, protect model integrity, manage user permissions, and ensure compliance with regulatory requirements. These safeguards maintain trust and reliability throughout the ML lifecycle.

According to McKinsey, organizations with mature ML architectures are 2-3 times more likely to achieve sustained business impact from their AI initiatives. The right architecture not only enhances maintainability and scalability, but it also helps establish a clear return on investment.

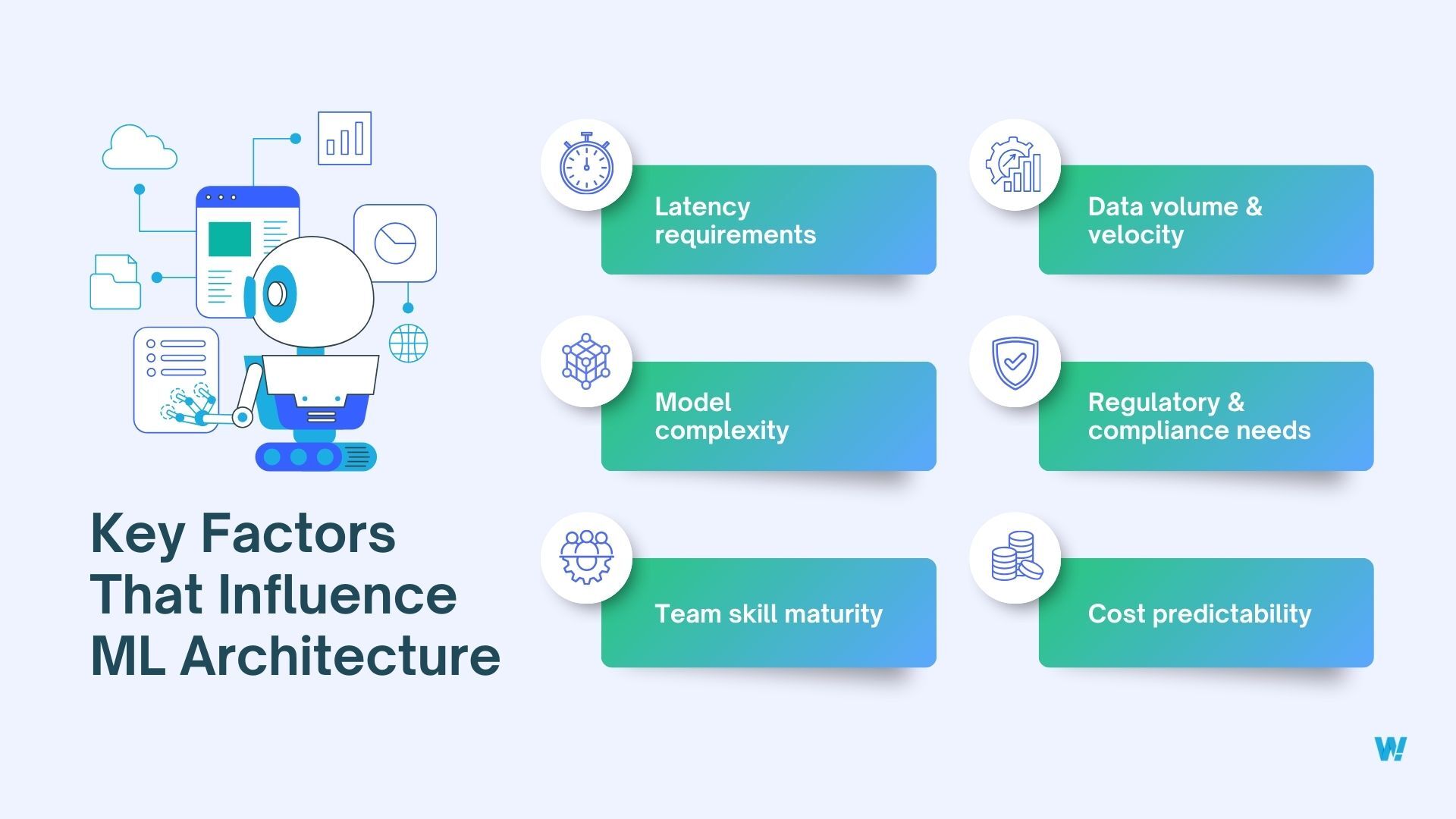

Key Factors That Influence ML Architecture Decisions

Choosing the right ML architecture is not a purely technical exercise—it’s a strategic decision that directly impacts system performance, scalability, compliance posture, and long-term cost. High-performing machine learning systems are almost always the result of aligning architectural choices with business realities, not just model accuracy. Below are the core factors enterprises must evaluate before locking in an ML architecture.

Latency Requirements (Real-Time vs Batch)

Latency expectations are often the first architectural constraint. Applications that require real-time inference—such as fraud detection, personalization engines, recommendation systems, or conversational AI—demand low-latency architectures optimized for fast model serving and efficient data pipelines.

In contrast, batch-based ML workloads (forecasting, reporting, demand planning, or model retraining) can tolerate higher latency and are better suited to offline training and scheduled inference.

Architectural implications:

- Real-time use cases favor online inference, model caching, edge deployment, and optimized serving layers.

- Batch workloads can leverage data lakes, distributed processing (Spark), and offline training pipelines.

Data Volume, Velocity & Variety

Data volume and velocity are equally critical. High-throughput environments—such as IoT platforms, fintech systems, or consumer apps with millions of users—demand scalable ML architectures capable of handling continuous data ingestion and streaming analytics. Systems dealing with slower-moving, structured datasets may benefit from simpler data warehouses paired with scheduled model execution.

Additionally, diverse data types—text, images, video, time-series—introduce architectural complexity.

Key considerations:

- Streaming data often requires event-driven architectures and real-time feature pipelines.

- Large datasets necessitate distributed training, scalable storage, and feature stores.

- Unstructured data increases dependency on specialized preprocessing and embedding pipelines.

Model Complexity & Lifecycle Needs

The complexity of the model itself also shapes architectural decisions. Lightweight models (e.g., linear models or decision trees) can often be deployed directly within application services. However, deep learning models, ensemble methods, or generative AI systems typically require dedicated ML infrastructure, GPU acceleration, and robust model orchestration layers to function reliably at scale.

Equally important is how often models need to be retrained, evaluated, and deployed.

Architectural impact:

- Complex models require GPU/TPU support, orchestration layers, and robust MLOps tooling.

- Frequent updates benefit from CI/CD-style ML pipelines with automated validation.

- Long-lived models require strong versioning, monitoring, and rollback mechanisms.

Regulatory, Privacy & Compliance Constraints

For many industries, especially healthcare, finance, and real estate, compliance is a non-negotiable architectural driver. Regulations such as GDPR, HIPAA, SOC 2, or financial compliance frameworks dictate where data can be stored, how it can be processed, and how decisions are audited. This often determines whether models can be cloud-hosted, must be on-premise, or require a hybrid deployment.

Data residency laws, auditability requirements, and explainability mandates often necessitate on-premise or hybrid ML architectures with strong governance, logging, and access controls built in from the outset.

Compliance-driven choices include:

- Data residency requirements influencing on-prem or private cloud ML architectures.

- Mandatory audit trails shaping logging, explainability, and governance layers.

- Privacy-first designs favoring data minimization and access-controlled pipelines.

Team Skill Maturity & Operational Readiness

An ML architecture is only as effective as the team operating it. Highly sophisticated architectures such as distributed training pipelines or real-time inference systems, require experienced ML engineers, data engineers, DevOps support, and introduce operational overhead that can overwhelm underprepared teams. Meanwhile, organizations with leaner teams may prioritize managed services or simpler architectures to reduce operational risk.

Organizations must honestly assess internal capabilities across data engineering, ML engineering, DevOps, and monitoring.

Practical alignment matters:

- Smaller teams benefit from managed ML platforms and simplified architectures.

- Mature teams can support custom pipelines, self-hosted models, and advanced orchestration.

- Skill mismatches often lead to fragile systems and stalled ML initiatives.

Cost Predictability & Scalability

Finally, cost is not just about infrastructure spend—it’s about predictability over time. Architectures that scale dynamically can deliver performance but may introduce high costs if not governed properly. Cost predictability must be evaluated early – some ML architectures scale linearly with usage, while others introduce volatile compute or storage costs. Aligning architectural choices with budget constraints ensures sustainability as models move from experimentation to production. It is imperative that decision-makers balance flexibility with financial control.

Cost-related architectural factors:

- Real-time inference and large models increase compute and serving costs.

- Batch processing enables cost-efficient scheduling and resource optimization.

- Well-designed architectures include usage caps, monitoring, and cost alerts.

Why This Matters

According to AWS benchmarks, real-time ML inference systems experience a 4–6 times cost increase if the architecture is not optimized for latency early in the project’s lifecycle. This highlights the need to make informed architectural choices based on these factors.

The most successful ML systems are not built by chasing the most advanced architecture—but by choosing the right-fit ML architecture aligned with business goals, data realities, regulatory needs, and team capabilities. Treating architecture as a strategic foundation—not an afterthought—is what separates scalable ML platforms from costly experiments.

Common Types of ML Architectures

There’s no one-size-fits-all solution when it comes to ML architectures. Here’s a high-level overview of the common architectural categories:

- Batch ML architectures

- Real-time/online ML architectures

- Streaming ML architectures

- Hybrid ML architectures

- Edge / on-device ML architectures

Understanding these types is essential to selecting the right architecture for specific applications.

Batch ML Architecture: When Offline Intelligence Is Enough

Batch ML architectures play a critical role in many data-intensive scenarios. They are generally suitable for applications such as:

- Demand forecasting

- Risk scoring

- Recommendation refreshes

- Analytics-heavy workloads

In a typical batch architecture, data is ingested periodically, offline training occurs, and inference jobs are scheduled at defined intervals. Results are then stored in databases or warehousing systems. The pros of this architecture include:

- Lower cost: Batch ML architectures optimize compute usage by running training and inference jobs on schedules rather than continuously. This allows teams to leverage off-peak cloud pricing, spot instances, and simpler storage patterns, keeping operational expenses predictable and manageable.

- Simpler infrastructure: Because batch systems do not require low-latency serving layers, real-time feature stores, or autoscaling endpoints, they are easier to design, deploy, and maintain. This simplicity reduces operational overhead and accelerates onboarding for data and ML teams.

-

Easier governance: Batch ML architectures simplify governance because data movement, model training, and inference happen on predictable schedules rather than continuously. This makes it easier to enforce data access controls, validate datasets before use, and document model versions for audits. Compliance checks, approvals, and reporting can be aligned with batch cycles, reducing operational risk and making regulatory reviews more straightforward compared to always-on real-time systems.

Yet, it is important to recognize its limitations:

-

Latency unsuitable for real-time use cases: Since predictions are generated at fixed intervals, batch architectures cannot respond instantly to user actions or live signals. This makes them unsuitable for applications like fraud detection, personalization, or conversational systems that demand immediate feedback.

-

Limited responsiveness to changing data: Batch pipelines may continue serving outdated predictions until the next scheduled run. In fast-changing environments, this delay can reduce model relevance and accuracy, especially when user behavior or market conditions shift rapidly.

For instance, Netflix reports that over 70% of its recommendation updates still rely on batch ML pipelines for efficiency. This showcases the importance of choosing batch processing where immediate feedback is not crucial, allowing businesses to benefit from lower costs and simpler operational processes.

Real-Time ML Architecture: Powering Instant Decisions

Real-time ML architectures are essential for applications demanding immediate feedback and insights. They are widely used in:

- Fraud detection

- Search ranking

- Dynamic pricing

- Conversational AI

These architectures involve real-time inference pipelines with components such as feature stores, low-latency model serving, and autoscaling infrastructure. The benefits include:

- Immediate personalization for users: Real-time inference enables applications to react instantly to user behavior, delivering context-aware recommendations, pricing, or responses that significantly improve user experience and engagement.

- Competitive advantage in responsive markets: Businesses can act on signals as they occur—detecting fraud, adjusting prices, or ranking content in milliseconds—allowing them to outperform competitors that rely on delayed, batch-based decision-making.

However, they can also have drawbacks:

- Higher infrastructure costs: Maintaining low-latency pipelines requires always-on compute, high-throughput data streaming, and autoscaling model servers, which can substantially increase cloud and operational expenses.

- Complex monitoring requirements: Real-time systems demand continuous observability across data freshness, model drift, latency spikes, and failure recovery, making monitoring and incident response more challenging than batch-based architectures.

According to Stripe, real-time ML models can reduce fraud losses by up to 40%, but they also increase infrastructure complexity significantly. A well-designed real-time architecture balances the cost of operations with the need for immediacy and precision, ensuring organizations can stay competitive and efficient.

Streaming ML Architecture: Continuous Learning Systems

Streaming ML architectures are designed for systems where data arrives continuously and insights must evolve in near real time rather than at fixed intervals. Unlike batch architectures that learn from historical snapshots or real-time systems that focus purely on instant inference, streaming ML enables models to adapt incrementally as new signals arrive. This makes it particularly effective for environments where user behavior, system states, or sensor data shift constantly and decisions must reflect the most recent context.

They are most applicable in scenarios like:

- IoT analytics

- User behavior modeling

- Log intelligence

These architectures, which often incorporate technologies such as Apache Kafka or Google Pub/Sub, rely on stream processors that compute features in real time, facilitating ongoing learning and adaptation. A notable statistic is that LinkedIn processes over 7 trillion events per day using streaming ML pipelines to enhance feed ranking, showcasing the technology’s effectiveness in rapidly adapting to user behavior

Hybrid ML Architecture: The Enterprise Default

Hybrid ML architectures have emerged as the most practical choice for enterprises operating at scale. Rather than committing fully to either batch or real-time systems, organizations blend both to balance responsiveness, cost efficiency, and operational stability. This approach is especially effective for teams modernizing legacy data platforms while still supporting real-time use cases like personalization, recommendations, or fraud detection. Most enterprises choose a hybrid architecture combining elements of both batch and real-time approaches. Most hybrid setups include:

- Batch training schedules

- Real-time inference pipelines

- Shared feature stores for data consistency

- Unified monitoring across systems

This pattern offers several advantages:

- Balanced cost and performance: By reserving real-time compute only for latency-sensitive workflows and handling heavy training in batch mode, enterprises avoid over-provisioning infrastructure while still meeting SLA requirements.

- Incremental modernization of systems: Hybrid architectures allow teams to layer real-time ML capabilities on top of existing batch pipelines, reducing migration risk and enabling phased adoption rather than disruptive rewrites.

- Flexible scaling options: Organizations can independently scale training, inference, and data pipelines based on demand, making it easier to support growth, seasonal spikes, or new ML use cases without re-architecting the entire system.

According to Databricks, over 65% of production ML systems today utilize hybrid architectures, reflecting their growing acceptance. Companies like Adobe have implemented hybrid models to optimize their Creative Cloud services, illustrating how a combination of approaches can lead to enhanced performance while maintaining budget control.

Edge ML Architecture: When Latency and Privacy Matter

Edge ML architectures are increasingly vital when dealing with applications requiring minimal latency and enhanced privacy.

This architecture is designed for scenarios where sending data to the cloud is either too slow, too expensive, or not permissible due to privacy constraints. Instead of relying on centralized inference, models are deployed directly on edge devices—bringing intelligence closer to where data is generated. This approach is increasingly relevant as applications demand instant responses, operate in low-connectivity environments, or handle highly sensitive user data. For many enterprises, edge ML is not just an optimization choice but a regulatory and user-trust requirement.

Common use cases include:

- Mobile apps

- Smart cameras

- Healthcare devices

- Industrial automation

| Architecture Type | Description | Key Use Cases | Benefits | Limitations / Considerations |

|---|---|---|---|---|

| Batch ML Architecture | Processes data in scheduled intervals; training and inference occur offline. | Demand forecasting, Risk scoring, Recommendation refreshes, Analytics-heavy workloads | Lower cost, simpler infrastructure, easier governance | Not suitable for real-time needs, limited responsiveness to changing data |

| Real-Time / Online ML Architecture | Provides instant inference on live data streams; low-latency pipelines. | Fraud detection, Search ranking, Dynamic pricing, Conversational AI | Immediate personalization, competitive advantage in responsive markets | Higher infrastructure costs, complex monitoring requirements |

| Streaming ML Architecture | Continuously ingests and processes data, allowing incremental model updates. | IoT analytics, User behavior modeling, Log intelligence | Continuous learning, adapts quickly to new data | Requires robust streaming infrastructure, operational complexity |

| Hybrid ML Architecture | Combines batch training with real-time inference to balance performance and cost. | Enterprise personalization, Recommendations, Fraud detection | Balanced cost and performance, incremental modernization, flexible scaling | Complexity in system integration, requires careful pipeline management |

| Edge / On-Device ML Architecture | Deploys models directly on devices to reduce latency and protect privacy. | Mobile apps, Smart cameras, Healthcare devices, Industrial automation | Minimal latency, enhanced privacy, offline functionality | Limited compute capacity on devices, model updates require careful deployment |

This architecture emphasizes on-device inference, which introduces unique design considerations. Models often need to be compressed through techniques like quantization or pruning to fit hardware constraints, and update mechanisms must be carefully planned to roll out improvements securely after deployment. According to Qualcomm, edge ML can reduce inference latency by up to 90% compared to cloud-only architectures, making it an attractive option for latency-sensitive applications. As organizations pivot toward privacy-preserving techniques, edge ML becomes essential for maintaining user trust while delivering responsive services.

Tap into our expert talent pool to build cutting-edge AI solutions.

Centralized vs Decentralized ML Architectures

When evaluating ML architectures, organizations must consider whether a centralized or decentralized approach is more fitting for their needs. Centralized ML platforms enable uniform governance and oversight, while decentralized pipelines promote agility and speed. Key trade-offs include:

-

Governance vs Agility: Centralized ML architectures provide strong governance through standardized tooling, shared policies, and consistent compliance enforcement. However, this often introduces approval bottlenecks and slower experimentation cycles. In contrast, decentralized teams can iterate faster and tailor models to specific business needs, but may sacrifice consistency and oversight.

-

Cost Visibility: Centralized platforms typically offer clearer cost tracking by consolidating infrastructure usage, licensing, and compute spend under a single umbrella. Decentralized ML architectures, while flexible, can fragment ownership of cloud resources and tools, making it harder for leadership to accurately monitor and optimize overall ML costs.

-

Duplication Risks: Without central coordination, decentralized teams may independently build similar feature pipelines, models, or tooling, leading to redundant effort and wasted spend. Centralized architectures mitigate this by promoting shared feature stores, reusable components, and organization-wide ML standards, reducing unnecessary duplication.

This balance between control and speed is a defining consideration when selecting the right ML architecture for enterprise-scale applications.

According to a study by Forrester, organizations with centralized ML platforms can reduce duplicated ML expenditure by as much as 30-40%. Companies such as Tesla have demonstrated the efficacy of decentralized systems that enable rapid prototyping while maintaining critical governance checkpoints, thus ensuring both innovation and compliance.

Designing Data & Feature Pipeline (feature stores, ETL, labeling)

A reliable ML architecture rests on its data backbone. Effective pipelines standardize how raw events and third-party datasets become features used in both training and inference. The core elements are: real-time ingestion (streaming), pre-processing and validation, a feature store for computed features (with deterministic, versioned transformations), dataset versioning for reproducibility, and labeling workflows that support active learning and human-in-the-loop annotation.

Design notes:

- Use immutable event logs and idempotent preprocessing to avoid silent drift.

- Keep training and serving transformations identical (use the feature store to serve the same feature code).

- Instrument lineage and data quality checks; a single corrupted feature can tank production models.

- Invest in tooling for active learning and sampling to prioritize labeling resources efficiently.

Operational best practices:

- Decouple model artifacts from serving logic; use a model registry and immutable artifact storage.

- Implement canary deployments and traffic splitting at the gateway level to validate model updates on a fraction of traffic.

- Employ request caching and result TTLs for idempotent queries to reduce load.

ML Architecture and Data Strategy Alignment

Aligning ML architecture with a well-defined data strategy is one of the most critical—but often underestimated—factors in building reliable machine learning systems. Even the most advanced ML architecture will underperform if it is powered by inconsistent, low-quality, or poorly governed data. A strong alignment ensures that models behave predictably in production and can scale alongside business growth.

-

Establishing feature stores for consistency: Feature stores act as a single source of truth for features used across training and inference. By standardizing feature definitions and reuse, teams reduce duplication, eliminate inconsistencies across models, and accelerate experimentation while maintaining reliability.

-

Implementing quality pipelines for data integrity: Robust data pipelines with validation checks, anomaly detection, and schema enforcement help ensure that incoming data remains accurate, timely, and complete. This minimizes silent data failures that can degrade model performance over time without obvious signals.

-

Preventing training–serving skew: Training–serving skew occurs when models are trained on data that differs from what they see in production. Aligning preprocessing logic, feature transformations, and data sources across environments ensures that model predictions remain stable and trustworthy after deployment.

When ML architecture and data strategy move in lockstep, organizations gain more predictable model behavior, faster iteration cycles, and greater confidence in scaling ML initiatives across teams and use cases. IBM highlights that data drift accounts for up to 45% of ML model performance degradation in production, underscoring the significance of the architecture-data strategy connection. By synergizing architectural design with data capabilities, organizations can significantly boost their models’ reliability and efficacy over time.

Tap into our expert talent pool to build cutting-edge AI solutions.

Security, Compliance & Governance in ML Architecture

As organizations adopt more complex ML architectures, security, compliance, and governance become foundational—not optional. Machine learning systems increasingly touch sensitive data, automate decisions, and operate at scale, making them subject to regulatory scrutiny and internal risk controls. A well-designed ML architecture must embed security and governance controls from day one rather than retrofitting them later.

Key considerations include:

- Data minimization: Collect and process only the data required for model performance. Where possible, apply anonymization or pseudonymization techniques to reduce exposure and limit regulatory risk under frameworks like GDPR and CCPA.

- Encryption & key management: Enforce TLS for data in transit and AES-256 for data at rest. Use cloud-native key management services or hardware security modules (HSMs) to securely store and rotate model artifacts, secrets, and API keys.

- Access controls: Implement role-based access control (RBAC) across model registries, feature stores, training pipelines, and inference endpoints. Clear separation of duties between data science, engineering, and operations teams helps prevent accidental or malicious misuse.

- Auditability: Maintain versioned datasets, model lineage, and immutable logs to support audits and incident investigations. Exportable audit trails are especially critical in regulated industries such as finance, healthcare, and insurance.

- Privacy-preserving techniques: For sensitive or distributed datasets, leverage approaches such as federated learning, on-device aggregation, or differential privacy to reduce raw data movement while preserving model quality.

Strong governance not only reduces risk but also builds trust with regulators, customers, and internal stakeholders—enabling ML systems to scale confidently across the enterprise. Research by Deloitte found that regulated industries spend 25-30% more on ML governance when architecture is not designed with compliance in mind, emphasizing the need for upfront planning. A well-designed architecture considers these challenges from the outset, substantially mitigating potential risks associated with data breaches and compliance failures.

Cost Optimization in ML Architecture

Cost management is a critical factor in ensuring the long-term sustainability and scalability of any machine learning initiative. Without careful planning, ML workloads—particularly large-scale training and frequent inference—can quickly become expensive and difficult to maintain. To optimize financial efficiency, organizations should consider several strategic approaches:

-

Understanding the split between training and inference costs: Training models, especially deep learning architectures, can consume significant computational resources, while inference costs scale with the number of predictions served. By clearly distinguishing these cost drivers, teams can prioritize optimization efforts where they have the most impact.

-

Implementing autoscaling practices: Dynamically scaling computational resources based on workload demand helps prevent over-provisioning during low-usage periods and ensures sufficient capacity when demand spikes. This not only reduces waste but also improves the overall responsiveness of ML systems.

-

Utilizing model tiering strategies: Deploying multiple versions of a model—for example, lightweight models for low-priority tasks and full-scale models for critical workloads—can reduce unnecessary computational load and lower operational costs without sacrificing performance.

-

Applying caching to reduce redundant computations: Frequently requested predictions or intermediate results can be cached to avoid repeated calculations. This practice not only speeds up response times but also reduces the recurring computational costs associated with high-volume inference tasks.

Poorly designed inference pipelines can lead to increases in ML costs by 3-5 times within the first year. Hence, cost optimization must be an ongoing focus throughout the ML architecture’s lifecycle. The approach must balance performance needs with budget constraints while enabling adaptability to shifting business priorities. By proactively addressing cost optimization, organizations can make their ML architectures more efficient, scalable, and financially sustainable, ensuring that AI initiatives deliver lasting value without exceeding budgetary constraints.

Common ML Architecture Mistakes to Avoid

- Over-engineering early: Simplicity often enhances speed and adaptability. Focus on building core capabilities and iterate as needed.

- Ignoring MLOps: Neglecting operational practices can lead to bottlenecks. Integrate MLOps into your workflows for better efficiency.

- Treating ML as stateless: This can complicate model versioning processes. Maintain robust version control systems for smoother management.

- Neglecting retraining plans: Models must evolve with shifting data landscapes. Regularly evaluate model performance and retrain accordingly.

- Architecture tied too closely to one vendor: This can restrict flexibility and options. Adopt open standards where possible to preserve agility.

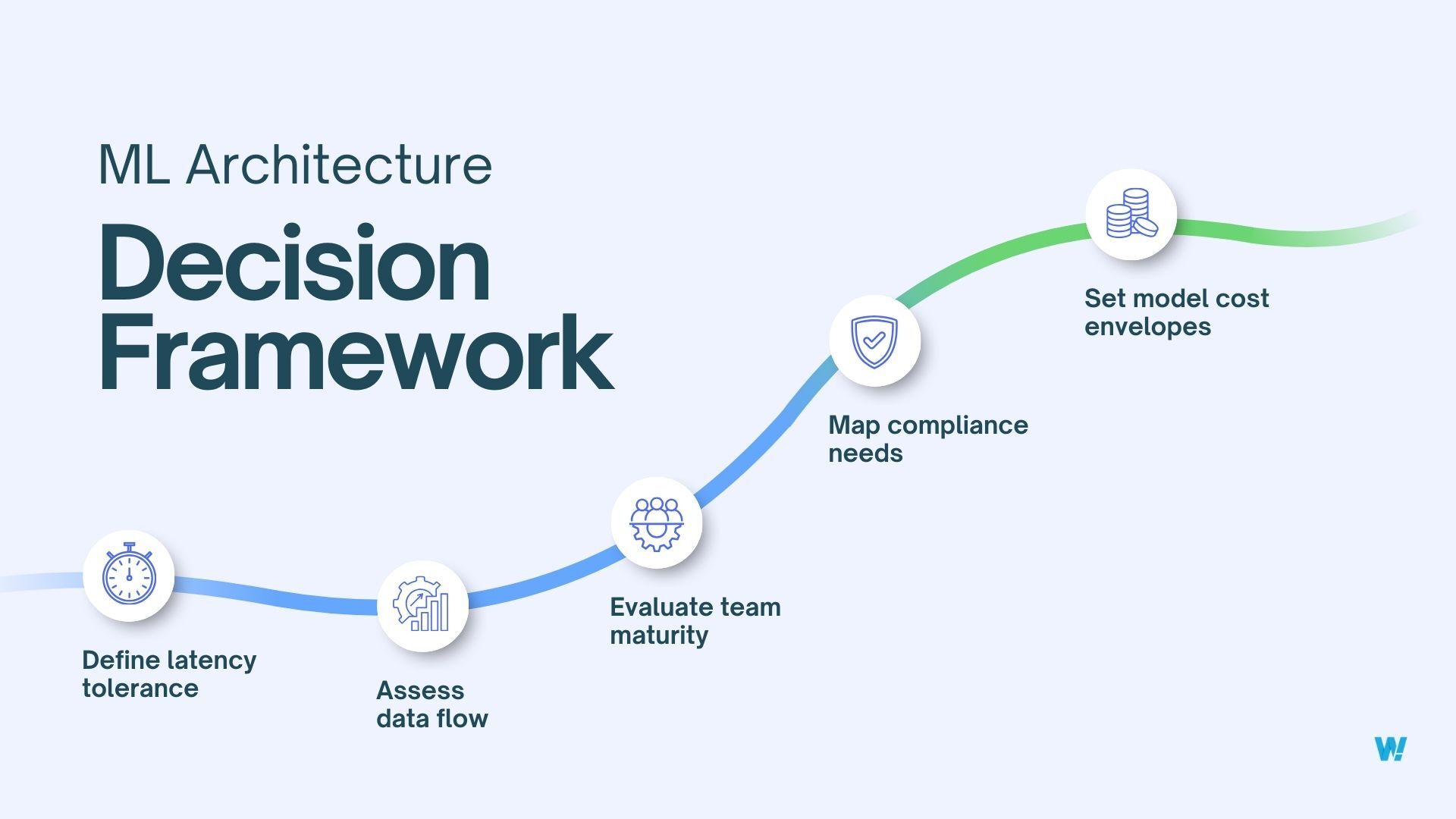

How to Choose the Right ML Architecture: A Decision Framework

Selecting the right ML architecture is less about chasing trends and more about making deliberate, context-aware decisions that align with business goals, technical constraints, and regulatory realities. A structured decision framework helps teams avoid costly re-architecture later while ensuring the system can evolve as models, data, and usage scale.

-

Define latency tolerance: Start by clearly defining how quickly your application must respond to users or downstream systems. Real-time use cases like fraud detection or recommendations require millisecond-level responses, while reporting or forecasting workflows may tolerate minutes or hours. This distinction immediately narrows your architectural options.

-

Assess data flow: Map how data enters, moves through, and exits your system—from ingestion and feature computation to training and inference. Understanding data velocity, volume, and transformation points helps identify whether batch, streaming, or hybrid pipelines are required and where potential bottlenecks may arise.

-

Evaluate team maturity: Your architecture should reflect your team’s operational readiness. Advanced setups like streaming pipelines, feature stores, or custom model serving require strong ML engineering, DevOps, and monitoring capabilities. Simpler architectures often deliver better outcomes when teams are still building ML maturity.

-

Map compliance needs: Regulatory and governance requirements can significantly influence architecture choices. Data residency rules, auditability, explainability, and privacy mandates may necessitate centralized controls, on-prem deployments, or edge inference to remain compliant across regions.

-

Set model cost envelopes: Establish clear cost boundaries for training, inference, storage, and experimentation. Defining acceptable cost ranges early ensures architectural decisions balance performance with predictability, while still allowing room to scale as usage and business value grow.

A thoughtful framework ensures your ML architecture supports not just today’s use case, but tomorrow’s growth as well.

Future Trends in ML Architecture

The ML architecture landscape is constantly evolving. Key trends to watch for include:

- Agentic ML systems that enable autonomous decision-making

- AutoML pipelines simplify development processes and enhance accessibility

- Serverless inference solutions are reducing infrastructure management complexities

- Foundation model-centric architectures focusing on flexibility and robustness across various applications

By 2027, IDC forecasts that over 50% of ML workloads will leverage hybrid or agent-based architectures, indicating a shift toward more versatile configurations. Companies that stay ahead of these trends will gain a competitive edge, positioning themselves for sustainable growth in the fast-evolving AI ecosystem.

Explore the Potential of ML Architecture

Designing the right machine learning architecture is more than just a technical decision—it’s a strategic choice that can determine the efficiency, scalability, and long-term impact of your AI initiatives. Whether you’re working with real-time, batch, or hybrid ML systems, selecting an architecture that aligns with your business goals ensures optimized performance, cost efficiency, and future-ready solutions. A thoughtful approach to ML architecture allows organizations to unlock the full potential of their data, streamline operations, and drive meaningful insights.

Partner with Wow Labz for Expert ML Architecture Design

At Wow Labz, we specialize in creating architecture-first ML solutions tailored to your unique requirements. Our team brings deep expertise in real-time, batch, and hybrid ML frameworks, coupled with a strong focus on MLOps, governance, and cost optimization. From designing scalable systems to implementing robust pipelines, we help enterprises establish machine learning architectures that are not only powerful but also sustainable for long-term growth. By partnering with us, you gain a trusted ally in transforming your generative AI strategy into actionable, high-impact results.

Ready to future-proof your machine learning initiatives?

Get in touch with Wow Labz today to explore how our expertise in ML architecture can help you build scalable, efficient, and high-performing AI systems tailored to your business needs. Contact us now and start your AI/ML transformation journey.

Tap into our expert talent pool to build cutting-edge AI solutions.